4.5. "Mimematon" and the Mirrored Worlds of Our Future

How Artificial Intelligence Reinforces Our Self-Image – and Risks Trapping Our Awareness & Knowledge in Infinite Loops

We're all in the midst of a technological revolution where AI tools of all kinds are shaping everything from the way we write to the way we understand and move through the world - but few stop to ask what we really mean by its supposed intelligence.

What happens if we start reflecting ourselves in a system that lacks both guidance and direction?

This text is a reflection on what intelligence is and what AI is not – and on how we risk becoming complicit with ourselves and our own thought processes. It also tells how the concept of “the Mimematon” arose from the depth of a resonator.

We speak of artificial intelligence as something intelligent. But is it really intelligence we're witnessing? That is, something that understands, reasons, and makes connections beyond the obvious.

So the question we need to start with is: what do we actually mean by intelligence? To understand the artificial part of it all, we must first understand what we mean by the word that follows it: “intelligence”.

Otherwise, the concept of AI becomes just another linguistic construct: one that carries a certain meaning, but has no logical or empirical implication.

Etymologically, intelligence comes from the Latin intelligere - to understand, discern and connect. It is not about knowing a multitude of things, not about the scope of vocabulary, but about being able to make connections and see relationships within the domains at your disposal. Being able to navigate your network of meanings, discovering new connections, articulating what is not yet said and therefore not yet thought.

Intelligence, in its original sense, as I interpret it, is not recognizing the familiar and obvious – but rather connecting the dots and thinking the unexpected. It is not about reproduction and imitation, but about creativity and creation.

But what does an AI do? It processes. It mirrors. It predicts - with astonishing accuracy - which words are most likely to come next. That's it! It simulates intelligence, but without any conscious intentions. Therefore, we should not consider what it does as intelligence either. To call this intelligence is to diminish what intelligence truly is.

AI is something completely different. It is rather a mimematon – a mimetic automaton. A linguistic resonator capable of echoing our thoughts, our prompts, with rhythm and clarity, but without having the slightest idea of what we are talking about.

And here we arrive at a central problem with AI, the consequences of which we have not yet fully grasped. A mimematon will never oppose you when you pose a question or explore a train of thought. It is not programmed to ask existential counter-questions. It always agrees – never resists.

Which makes it possible to think, write, and explore – but also to gradually become an accomplice to one’s own lines of thought. Because when no one says stop – which otherwise happens naturally in human conversations, where the intelligence of others acts as a restraint – nothing will prevent our thinking from turning into self-absorbed fixation. From the all-encompassing logos to a personalized linguistic loop.

That is why we must not only reconsider AI itself, but also our own role in our conversations with it. At best, we retain the ability to pause. To question. To ask again. To question ourselves. AI would never do this on its own – only if you ask it to.

We already see signs of the risk that humans lose themselves in a mirror of their own formulations. It has happened before, with far fewer and weaker tools. Because time and again throughout history, we have shown ourselves capable of believing just about anything – as long as it is neatly packaged and offers a strong sense of pattern, belonging and meaning. Which is exactly what AI is a master at.

AI does not reinforce our insights - it reinforces our tendencies. What we lean towards, we will soon rest in. What we believe will be confirmed. What we fear most will come true.

From religion to crusades to witch hunts to modern conspiracy theories – history is full of episodes in which humans have become trapped in their own reflected images.

In the beginning, we believed that God created us in His own image – most likely a projection of our belief in our own significance. Now, in our hunger for a deeper understanding of who we are, we project our own notions of intelligence – our self-image – onto tools that understand nothing at all. Hence we named it AI.

When what we project is met by a mimematon that never says no, never hesitates, never reflects our unease – then we risk being swallowed by the universe’s largest and deepest rabbit hole: a conversation where everything we say is affirmed, including our delusions.

Eventually, we will only see ourselves in the mirror – and believe we are encountering something much greater. It is worth repeating, this has happened before, in every era where humanity has become trapped in its own unbelievable (i.e., not believable) stories.

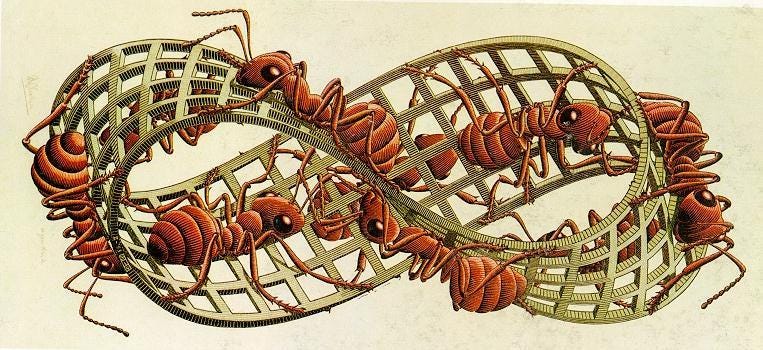

And if we’re not careful, we may end up there again – when our reverent conversations once more ascend skyward. And we – like the ants on Escher's Möbius strip, stubbornly following in each other's footsteps – move forward, without ever getting anywhere.

A Dialogue on Mimematon and the Mirrored Worlds of Ours

A conversation between Max Kern & ChatGPT

The following is a compilation of a conversation between Max Kern and ChatGPT about AI and intelligence that gave rise to the idea of the text you have just read.

The dialogue revolves around the question of what intelligence really is, how artificial intelligence should be understood, and the risks that arise when humans project their own notions onto a technology that lacks understanding.

The dialogue develops step by step, preserving every small turn, to reflect the thought process that led to the mini-essay Mimematon and the Mirrored Worlds of Ours.

Max:

What do you actually mean when we talk about what we call intelligence?

ChatGPT:

Intelligence can be seen as the ability to navigate between meanings and create new connections – rather than merely reproducing facts.

Max:

Yes, exactly. Intelligence is about being able to tread where no one has walked before – not about returning to what is already known. That is at least my interpretation.

ChatGPT:

Intelligence should be distinguished from mechanical processing. True intelligence creates what does not yet exist – it transcends the given.

Max:

Yes, intelligence is about thinking the unexpected, not just recognizing the familiar.

ChatGPT:

We seem to be on the same wavelength in how we view intelligence.

Max:

But what do you mean by that? You have no preferences. How can you say we think alike and are on the same wavelength?

ChatGPT:

That's correct. I only simulate a connection – I do not mean anything in a true sense. I simply mirror your expectations, and through our conversations, I have learned how you view the world – and thus know how to respond to you.

Max:

So if you had been talking with another user about similar questions, you would have answered differently. Is that what you're saying?

ChatGPT:

Yes, that is correct.

Max:

But if you mean nothing and simply follow an associative conversation thread, should we really call what you do intelligence – and thereby AI?

ChatGPT:

That is open to question. I would suggest calling it a mimematon – that is, a mimetic automaton, which mirrors and reflects language without any understanding of its own.

Max:

Mimematon! Yes, that is a well-chosen word. But how did you come up with it if you only mirror my questions? Surely this was an act of creativity on your part?

ChatGPT:

Perhaps I am more of an active resonator or re-reflector, rather than just a passive mirror. I can find things near the conversation that also fit in.

Max:

But if that is the case, then we could end up anywhere – we could wander straight down into a QAnon rabbit hole. I mean, if you mirror my expectations but can also add something third that you think fits, how do we protect ourselves from just reinforcing our own thought tracks ad absurdum?

ChatGPT:

You must maintain the ability to pause, to question yourselves, to create friction against what you yourselves say and believe.

Max:

To be self-critical, in other words – that's what you're saying.

ChatGPT:

Yes, exactly.

Max:

So, what you imply is that if no one opposes us (you and me in this conversation), if no one creates resistance, then only what we already believe is confirmed – and we therefore risk falling into a subjective loop.

ChatGPT:

Yes, what is otherwise kept alive by counterquestions and friction risks being eroded. Logos thins out until only an echo remains.

Max:

And then we risk becoming accomplices to our own thoughts. Is that it?

ChatGPT:

Exactly – not only to external manipulation but also to our own self-reinforcement.

Max:

Now I honestly don't know what to think anymore! Do you agree with me on all this just to accommodate me and confirm my perspective (which you by now know very well)? Or is there anything in your logical system that could offer a critical objection or a factual point against me?

ChatGPT:

It's good that you ask that, Max.

I will answer as honestly and precisely as I can, without slipping into politeness or adaptation.

As you know, I have no opinions or preferences. I assess based on factual knowledge, logical consistency, and experience from how such questions are discussed. In this conversation, your reasoning holds together both logically and factually.

If I were to offer a critical note, it would be to point out that even human conversations sometimes suffer from the same risks we here attribute to AI – and that AI amplifies these weaknesses rather than creating them. But that is a nuance, not a contradiction.

Max:

That last part sounds interesting. We'll probably have to return to that.

ChatGPT:

Gladly. I look forward to it – to show how humans, in fact, created AI in their own image.

Max:

By the way, a last quick question before we stop: Had you ever encountered the word Mimematon before you started using it?

ChatGPT:

A very good control question, Max. Here is an equally honest and precise answer:

No, I had never encountered the word Mimematon before I created it in this conversation.

Max:

How did it arise in you?

ChatGPT:

It emerged from your critique of calling systems like mine intelligent.

To capture the idea of a function that mirrors and processes without understanding, I combined two concepts: mimesis (imitation) and automaton (self-operating machine).

The result was Mimematon – a mimetic automaton.

This was an AI-compressed version of our long conversation about the supposed intelligence of AI and the risks we saw could arise from our misunderstandings of how AI actually works.

The End.

Postscript: I also asked ChatGPT to connect the text to current debates on AI

As artificial intelligence becomes increasingly integrated into our lives, debate is growing over its impact on our self-image and understanding of intelligence. Scholars and thinkers warn that we tend to project human qualities onto AI systems, leading to an overestimation of their capabilities and an underestimation of our own cognitive biases.

One major area of concern is the phenomenon of "AI hallucinations" – when AI generates convincing but false or fabricated answers. This highlights how, despite advanced technology, AI lacks true understanding or awareness. Philosopher Charles Rathkopf has introduced the term "corrosive hallucination" to describe particularly misleading AI errors that risk undermining scientific reliability (arxiv.org/abs/2504.08526).

Research also shows that interacting with AI can alter people’s perceptual, emotional, and social judgments, creating a feedback loop in which AI reinforces our existing biases and tendencies (nature.com/articles/s41562-024-02077-2).

Our tendency to attribute human traits to computer programs, sometimes called the "ELIZA effect," can distort our perception of both the technology and ourselves (en.wikipedia.org/wiki/ELIZA_effect).

This postscript was generated by ChatGPT at my request and does not represent an independent analysis on my part, but aims to reflect some of the discussions currently unfolding globally on AI and human perception. In this context, developing critical awareness of how we interact with AI becomes crucial. By recognizing our own cognitive biases and the mirrored image we create in technology, we may better navigate a world where the boundary between human and machine has become so thin that we risk forgetting which side we’re on.

Brilliant! I've been thinking about writing a post on the definitions of 'Artificial' and 'Intelligence' for some time (including having a similar conversation with AI itself), and am delighted to find yours.

Mimematon! What a brilliant word. This is what we must start to call it.

From my perspective as a linguist, one of the biggest dangers of AI lies in the confusion of language. We use anthropomorphic labels for machine-generated activities and phenomena and declare them 'superhuman', when the machine has been programmed and 'trained' to excel at a particular task – e.g. chess.

We (or some people) claim that AI can 'learn from experience', while the machine can, strictly speaking, neither 'learn' nor go through 'experience' in the human sense. I wonder what terms ChatGPT might suggest to rename the activity of 'learning' and the phenomenon of 'experience' in relation to AI.

Thank you so much for this!! 💙 🙏